By Stuart Geiger, ethnographer and post-doctoral scholar at the Berkeley Institute for Data Science

I’ve been working on a research project about counter-harassment projects on Twitter, focusing on blockbots (bot-based collective blocklists). Blockbots are a different way of responding to online harassment: a more decentralized alternative to the standard practice of moderation, in which a site’s staff typically goes through its own process to decide which accounts should be suspended from the entire site. I’m excited to announce that my first paper on this topic will soon be published in Information, Communication & Society (available as a PDF on my website and in the publisher’s version).

This post summarizes that article and offers some thoughts about future work in this area. The paper is based on my empirical research, but it takes a more theoretical and conceptual approach given how novel these projects are. I give an overview of what blockbots are, the context in which they have emerged, and the issues they raise about how social networking sites are to be governed and moderated with computational tools. There is room for much more research here, and I hope to see work from a variety of disciplines and methods.

What are blockbots?

Blockbots are automated software agents developed and used by independent, volunteer Twitter users, who have built their own social-computational tools to help moderate their own experiences on the site.

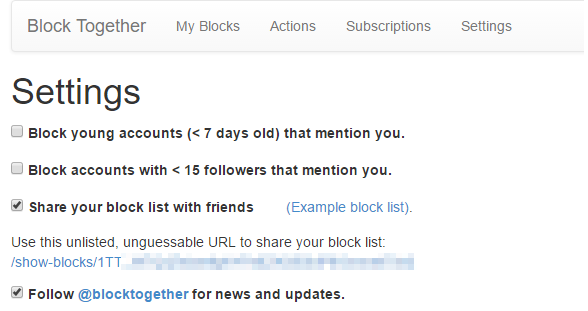

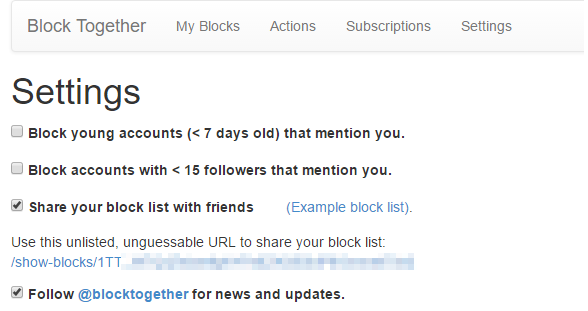

The blocktogether.org interface, which lets people subscribe to other people’s blocklists, publish their own blocklists, and automatically block certain kinds of accounts.
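
To make the subscription model a little more concrete, here is a minimal sketch in Python of how a shared blocklist could work in principle. It is not how blocktogether.org is implemented: the class and method names (`BlocklistHub`, `publish`, `subscribe`, `block`, `effective_blocks`) and the account names are hypothetical, everything lives in memory, and no Twitter API is called.

```python
from collections import defaultdict


class BlocklistHub:
    """Toy in-memory model of shared blocklists (hypothetical; no Twitter API calls)."""

    def __init__(self):
        self.published = defaultdict(set)      # curator -> accounts on their public list
        self.subscriptions = defaultdict(set)  # subscriber -> curators they subscribe to
        self.personal = defaultdict(set)       # user -> accounts they blocked themselves

    def publish(self, curator, accounts):
        """Add accounts to a curator's public blocklist."""
        self.published[curator].update(accounts)

    def subscribe(self, subscriber, curator):
        """Subscribe a user to a curator's blocklist."""
        self.subscriptions[subscriber].add(curator)

    def block(self, user, account):
        """Record a block the user made on their own."""
        self.personal[user].add(account)

    def effective_blocks(self, user):
        """Union of the user's own blocks and every list they subscribe to."""
        blocked = set(self.personal[user])
        for curator in self.subscriptions[user]:
            blocked |= self.published[curator]
        blocked.discard(user)  # a user never ends up blocking themselves
        return blocked


hub = BlocklistHub()
hub.publish("curator_a", {"troll_1", "troll_2"})
hub.subscribe("alice", "curator_a")
hub.block("alice", "spammer_9")
hub.publish("curator_a", {"troll_3"})  # later additions reach subscribers too
print(sorted(hub.effective_blocks("alice")))
```

In this sketch, a subscriber's blocks are computed from the curator's list at lookup time, so accounts the curator adds later are picked up without the subscriber doing anything. That is the collective, decentralized quality described above: each user decides whose judgment to rely on, rather than waiting for a site-wide suspension.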
