The Powerful and the Powerless: Reflections from FAccT 2020

By Zoe Kahn, CTSP Fellow 2019

Images of people soaring through space wearing astronaut helmets adorn the walls of a narrow hallway leading from the street-level entrance to the hotel lobby elevator bank. A life-sized model of an astronaut stands off to the side of the hotel registration desk. It’s safe to say… the conference hotel has an outer space theme.

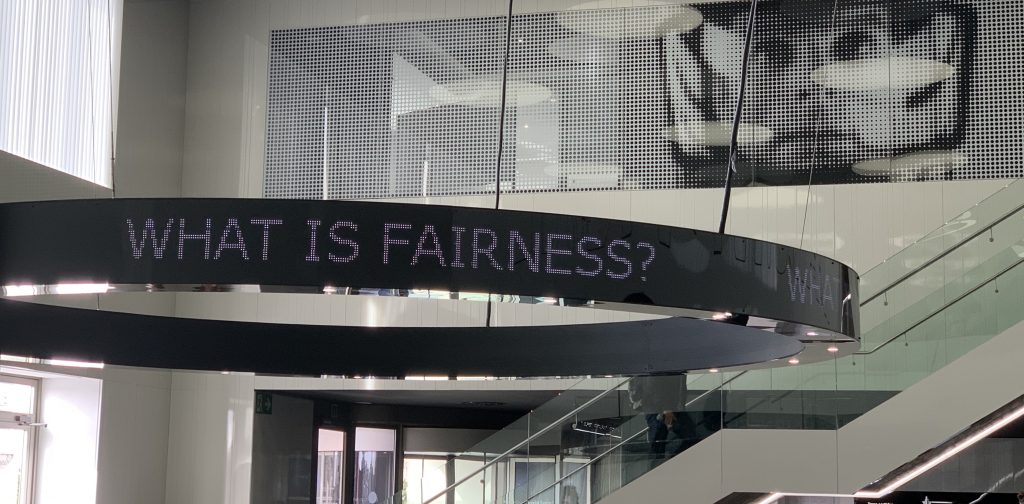

The words “What is fairness?” repeat on a large circular digital display at the center of the hotel lobby. I can’t decide if the display belongs in a newsroom, on a stock trading floor, or in a train station. Perhaps art? Perhaps commentary?

The word in question changes each day. What is Fairness? What is Accountability? What is Transparency? What questions are we missing?

It is here—in Barcelona, Spain—that ~600 people gather for the ACM Fairness, Accountability, and Transparency (FAccT) conference to discuss efforts to design, develop, deploy, and evaluate machine learning models in ways that are more fair, accountable, and transparent.

Despite the conference’s roots in computer science, there were several papers, tutorials, and CRAFT sessions that challenged the community to think beyond technical fixes to broader socio-technical contexts rife with power and complex social dynamics.

Let me begin with a discussion of power. The ACLU of Washington and the Media Mobilizing Project put on a terrific CRAFT session titled Creating Community-Based Tech Policy: Case Studies, Lessons Learned, and What Technologists and Communities Can Do Together. The two-part session first documented the ACLU of Washington’s efforts, in partnership with academics primarily from the University of Washington (Katell et al., 2020), to include marginalized and vulnerable communities in the development of tech policy surrounding the acquisition and use of surveillance technologies in the City of Seattle. The Media Mobilizing Project then presented their work investigating the use of risk assessment tools in the criminal justice system across the country and, in particular, their efforts with community groups to combat the use of these tools in Philadelphia. Taken together, the session provided models for meaningfully engaging marginalized and vulnerable populations in algorithmic accountability, forming the foundation for workshop participants to reflect upon their own power.

The workshop portion of the CRAFT session asked us, the participants, to reflect on our own power. Perhaps most surprising was being in a room of people with ‘power’ who, in many ways, felt powerless. Professionals at corporations felt they were not able to meaningfully influence their product directions; young academics felt marginalized in the face of bureaucratic academic institutions; funders felt at a loss for how to develop funding strategies in countries that lack democracy.

If you’re interested in tech policy and participatory or co-design methodologies, I’d recommend reading Katell et al.’s paper, Toward Situated Interventions for Algorithmic Equity: Lessons from the Field. The paper documents lessons learned from working with the ACLU of Washington to engage marginalized and vulnerable communities in the development of tech policy around the adoption of surveillance technologies in the City of Seattle.

The discussion of power did not end there. Barabas et al. challenged the FAccT community to ‘study up’. The term, drawn from anthropology, calls for researchers to study people and organizations of power, instead of marginalized and vulnerable communities. Barabas et al. argue that reorienting to ‘studying up’ will require asking a different set of questions. If the questions we ask uphold institutions of power, so too do the findings and recommendations that emerge. As one example, developing algorithmic systems to more accurately predict criminality fundamentally upholds the criminal justice system. We must instead ask questions that allow us to challenge the broader structures of power and inequality.

Importantly, this paper also provides researchers and data scientists with guidance for refusing projects with questionable bases. How do we, as researchers or data scientists brought onto projects with governments or other organizations with questionable underpinnings, refuse and say that we will not work on a particular project? Rather than seeing refusal as a dead end, the authors draw on the work of Simpson (2014), arguing that “refusal can structure possibilities, as well as introduce new discourses and subjects of research by shedding light on what was missed in the initial research proposal” (Barabas et al., 2020: 173).

For a case study on ‘studying up,’ including a terrific discussion of grappling with when and how to refuse proposed research, I’d recommend reading Barabas et al.’s paper, Studying Up: Reorienting the Study of Algorithmic Fairness Around Issues of Power.

Taken together, these papers point to new opportunities in the FAccT community to meaningfully engage impacted communities while, at the same time, questioning the larger structural systems within which we live. With that, we ask ourselves: what questions are we missing?

Zoe Kahn is a PhD student at the UC Berkeley School of Information. She is a 2019 joint-fellow with the UC Berkeley Center for Technology, Society, and Policy, Algorithmic Fairness and Opacity Working Group, and Center for Long-Term Cybersecurity. Her attendance at the ACM Fairness, Accountability, and Transparency Conference in 2020 was made possible by support from the UC Berkeley Center for Technology, Society, and Policy.

Sources:

Chelsea Barabas, Colin Doyle, JB Rubinovitz, and Karthik Dinakar. 2020. Studying up: reorienting the study of algorithmic fairness around issues of power. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (FAT* ’20). Association for Computing Machinery, New York, NY, USA, 167–176. DOI:https://doi.org/10.1145/3351095.3372859

Michael Katell, Meg Young, Dharma Dailey, Bernease Herman, Vivian Guetler, Aaron Tam, Corinne Binz, Daniella Raz, and P. M. Krafft. 2020. Toward situated interventions for algorithmic equity: lessons from the field. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (FAT* ’20). Association for Computing Machinery, New York, NY, USA, 45–55. DOI:https://doi.org/10.1145/3351095.3372874

Audra Simpson. 2014. Mohawk interruptus: Political life across the borders of settler states. Duke University Press.
